while (1): study();

[Paper Review] Understanding Back-Translation at Scale

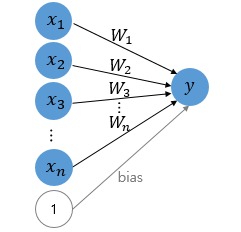

Source: https://arxiv.org/abs/1808.09381

Understanding Back-Translation at Scale: "An effective method to improve neural machine translation with monolingual data is to augment the parallel training corpus with back-translations of target language sentences. This work broadens the understanding of back-translation and investigates a numb…"

This paper was published in 2018 as a joint research effort by Facebook and Google.

1. Introduction: Machine translation systems..
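The idea quoted in the abstract, augmenting the parallel training corpus with back-translations of target-language sentences, can be sketched as below. This is a minimal illustration under stated assumptions, not the paper's implementation: `augment_with_back_translation` and the lookup-table "model" are hypothetical stand-ins for a trained target-to-source NMT system, and how the synthetic sources are generated (which the paper studies in detail) is not modeled here.

```python
from typing import Callable, List, Tuple

# (source sentence, target sentence); the forward system translates source -> target.
Pair = Tuple[str, str]


def augment_with_back_translation(
    parallel: List[Pair],
    monolingual_target: List[str],
    reverse_translate: Callable[[str], str],
) -> List[Pair]:
    """Return the parallel corpus extended with synthetic (source, target) pairs.

    Hypothetical helper: each monolingual target-language sentence is
    translated back into the source language by `reverse_translate`
    (assumed to be a trained target -> source model). The machine-generated
    text becomes the synthetic source side, while the genuine human-written
    sentence stays on the target side. The input list is not modified.
    """
    synthetic = [(reverse_translate(t), t) for t in monolingual_target]
    return list(parallel) + synthetic


def toy_reverse(sentence: str) -> str:
    """Toy stand-in for a de -> en model (assumption): a fixed lookup table."""
    lexicon = {"Hallo Welt": "Hello world", "Guten Morgen": "Good morning"}
    return lexicon.get(sentence, "<unk>")


if __name__ == "__main__":
    bitext = [("Thank you", "Danke")]        # real en -> de pair
    mono_de = ["Hallo Welt", "Guten Morgen"]  # German-only monolingual data
    corpus = augment_with_back_translation(bitext, mono_de, toy_reverse)
    for src, tgt in corpus:
        print(f"{src!r} -> {tgt!r}")
```

The design keeps the real sentences on the target side so the forward model always learns to produce fluent, human-written output; only its inputs are synthetic.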

Paper Review

2021. 7. 1. 21:43