List: nlp (3)

while (1): study();

[Paper Review] Dual Learning for Machine Translation

Source: https://arxiv.org/abs/1611.00179 (arxiv.org)

Dual Learning for Machine Translation: "While neural machine translation (NMT) is making good progress in the past two years, tens of millions of bilingual sentence pairs are needed for its training. However, human labeling is very costly. To tackle this training data bottleneck, we develop a du.."

I have just finished implementing a machine translator, and in a Colab environment, using dual learning, fine-..

[Paper Review] On Layer Normalization in the Transformer Architecture

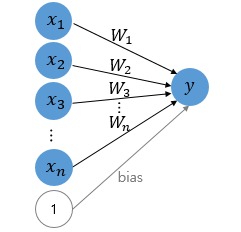

Source: https://arxiv.org/abs/2002.04745 (arxiv.org)

On Layer Normalization in the Transformer Architecture: "The Transformer is widely used in natural language processing tasks. To train a Transformer however, one usually needs a carefully designed learning rate warm-up stage, which is shown to be crucial to the final performance but will slow down the optimizati.."

1. Introduction: The Transformer is currently, beyond natural language processing..

[Paper Review] Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation

arXiv link: https://arxiv.org/abs/1609.08144

Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation: "Neural Machine Translation (NMT) is an end-to-end learning approach for automated translation, with the potential to overcome many of the weaknesses of conventional phrase-based translation systems. Unfortunately, NMT systems are known to be computationall.."